Post-quantum cryptography aims to find schemes that can withstand an attack from a quantum computer. The field is becoming increasingly important as the development of quantum computers intensifies: current cryptography schemes that rely on the difficulty of factorisation can be broken in polynomial time by a sufficiently large quantum computer. So researchers have looked for other ‘hard’ problems in mathematics (ones for which the best known algorithms take exponential time) that can be used to build classical crypto schemes that withstand quantum attacks (at least for now).

In general, post-quantum schemes require more resources than traditional cryptography, in particular elliptic curve cryptography; security against quantum computer attacks comes at a cost. Four families of post-quantum schemes are considered to be the best candidates, and in this blog post I will briefly discuss each of them.

Code-Based Cryptography

Traditionally, error-correction codes are used to detect and correct bit errors when messages are transmitted over an unreliable channel. The code can be chosen to fulfil the requirements of the channel; in particular the number t of correctable bit errors can be determined.

Decoding an arbitrary code is computationally hard and can be infeasible, depending on the code parameters. However, efficient decoding algorithms are known for some specific codes, so in practice only those codes are used.
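To make the idea of a code with an efficient decoder concrete, here is a minimal sketch of the classic Hamming(7,4) code, which corrects t = 1 bit error. It is only an illustration of error correction in general, not one of the codes used in code-based cryptography, and the function names are my own.

```python
# Toy Hamming(7,4) code: 4 data bits -> 7-bit codeword, corrects t = 1 bit error.
# Codeword positions are numbered 1..7; positions 1, 2 and 4 hold parity bits.

def encode(data):
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4  # parity over positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # parity over positions 2, 3, 6, 7
    p4 = d2 ^ d3 ^ d4  # parity over positions 4, 5, 6, 7
    return [p1, p2, d1, p4, d2, d3, d4]

def decode(received):
    r = list(received)
    # Each syndrome bit re-checks one parity group.
    s1 = r[0] ^ r[2] ^ r[4] ^ r[6]
    s2 = r[1] ^ r[2] ^ r[5] ^ r[6]
    s4 = r[3] ^ r[4] ^ r[5] ^ r[6]
    # Read as a binary number, the syndrome is the position of the flipped bit
    # (0 means no single-bit error was detected).
    position = s1 + 2 * s2 + 4 * s4
    if position:
        r[position - 1] ^= 1
    return [r[2], r[4], r[5], r[6]]

message = [1, 0, 1, 1]
received = encode(message)
received[4] ^= 1  # the channel flips one bit
print(decode(received) == message)
```

Decoding here is just three parity checks, which is exactly the kind of efficiency that comes from knowing the structure of the code.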

The main security assumption of code-based cryptography is the difficulty of decoding a random linear code. Even with quantum computers, only exponential-time algorithms are known.

The first code-based public-key cryptosystem was proposed by McEliece in 1978 and has not been fundamentally broken since. Its main problem is the size of the keys: it requires key sizes of about 4MB in order to achieve post-quantum security. Niederreiter later introduced a scheme that improved the encryption and decryption cost and required smaller keys.

There have been many attempts to reduce key sizes by using different codes that have some redundant structure in the public key. But this structure has led to efficient classical attacks on the crypto-systems.

There are also signature schemes, hash functions and random number generators based on code-based cryptography.

Lattice-Based Cryptography

The underlying ‘hard’ problem is the shortest vector problem (SVP): given a basis of a lattice L in a vector space V and a norm N, one must find the shortest non-zero vector in L, as measured by N. It is believed that the SVP is computationally hard even for quantum computers.
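As a rough illustration of the SVP, here is a brute-force sketch in two dimensions using the Euclidean norm. The basis, the search bound and the function name are all my own choices for the example. The search tries every small integer combination of the basis vectors, so its cost grows exponentially with the dimension of the lattice.

```python
import itertools

def shortest_vector(basis, bound=10):
    """Try every integer combination with coefficients in [-bound, bound]
    and return (vector, squared Euclidean norm) of the shortest non-zero one."""
    dim = len(basis[0])
    best, best_norm_sq = None, None
    for coeffs in itertools.product(range(-bound, bound + 1), repeat=len(basis)):
        if not any(coeffs):
            continue  # skip the zero vector
        v = tuple(sum(c * b[i] for c, b in zip(coeffs, basis)) for i in range(dim))
        norm_sq = sum(x * x for x in v)
        if best_norm_sq is None or norm_sq < best_norm_sq:
            best, best_norm_sq = v, norm_sq
    return best, best_norm_sq

# A deliberately "bad" basis: both vectors are long, but the lattice they
# generate contains much shorter vectors.
bad_basis = [(3, 14), (4, 23)]
vector, norm_sq = shortest_vector(bad_basis)
print(vector, norm_sq)
```

With n basis vectors the loop visits (2 * bound + 1)^n combinations, which is fine for n = 2 and hopeless for the hundreds of dimensions used in real schemes.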

NTRUEncrypt is a commercial public-key encryption scheme based on lattices. Public key sizes of about 1.5kB to 2kB are required in order to achieve the desired security. Note that these requirements are based on the algorithms that have been developed (up to today) to solve the SVP. These requirements are called ‘parameters’.

Closely related is the signature scheme NTRUSign. However, its security is not yet well understood, so there are no recommended parameters for using it as a post-quantum signature scheme.

There are also key exchange protocols built on lattice-based cryptography, such as NewHope, which has been experimentally adopted by Google. NewHope is not symmetric and needs two rounds of agreement (unlike the Diffie-Hellman key exchange). It also does not include authentication, so this needs to be achieved by other means.

By switching to post-quantum key exchange now, an attacker in the future does not learn encryption keys even if they break long-term authentication keys. Therefore, combining a post-quantum key exchange with a classical authentication scheme provides a cost-effective, long-term secure authenticated key exchange for the interim period until all cryptographic primitives have been transitioned to post-quantum secure schemes.

Hash-based Cryptography

Hash functions are one-way functions that map bit strings of arbitrary length to relatively short, fixed-length bit strings called hash values. There are three required properties:

- Pre-image resistance: it must be hard to compute a pre-image of a hash value, i.e. a bit string that, once hashed, results in a given hash value.

- Second pre-image resistance: given a bit string, it must be hard to find a different bit string that has the same hash value.

- Collision resistance: it must be hard to find two arbitrary bit strings that have the same hash value.
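Here is a small sketch of these ideas using SHA-256 from Python's standard library. The helper names are my own. It shows that the output length is fixed whatever the input length, and that collisions become easy to find once the hash value is truncated to only 16 bits, which is why real hash values need to be long.

```python
import hashlib

def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()

# Fixed-length output: 32 bytes regardless of the input length.
print(len(h(b"")), len(h(b"a" * 10_000)))

def truncated_collision(n_bytes=2):
    """Find two different inputs whose SHA-256 values agree on the first
    n_bytes bytes. Feasible only because the truncated output is tiny."""
    seen = {}
    counter = 0
    while True:
        msg = str(counter).encode()
        tag = h(msg)[:n_bytes]
        if tag in seen:
            return seen[tag], msg
        seen[tag] = msg
        counter += 1

a, b = truncated_collision()
print(a, b, h(a)[:2] == h(b)[:2])
```

The same search against the full 256-bit output is far out of reach, which is what collision resistance means in practice.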

Since, by definition, it is not computationally feasible to compute the inverse of a hash function, it is not known how to construct public-key encryption schemes using hash functions. However, it is possible to construct signature schemes, where in general the required signature size increases if more signatures for the same public key are needed.
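To show how a signature can be built from nothing but a hash function, here is a minimal sketch of Lamport's one-time signature scheme. This is my choice of example rather than a scheme discussed above, and the function names are my own. Each key pair must sign only one message, because every signature reveals half of the private key.

```python
import hashlib
import secrets

def H(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()

def keygen():
    # Private key: two random 32-byte secrets for each of the 256 digest bits.
    sk = [[secrets.token_bytes(32), secrets.token_bytes(32)] for _ in range(256)]
    # Public key: the hash of every secret.
    pk = [[H(s0), H(s1)] for s0, s1 in sk]
    return sk, pk

def digest_bits(message: bytes):
    d = H(message)
    return [(d[i // 8] >> (7 - i % 8)) & 1 for i in range(256)]

def sign(sk, message: bytes):
    # Reveal one secret per digest bit, selected by the bit's value.
    return [sk[i][bit] for i, bit in enumerate(digest_bits(message))]

def verify(pk, message: bytes, signature) -> bool:
    bits = digest_bits(message)
    return all(H(s) == pk[i][bit] for i, (s, bit) in enumerate(zip(signature, bits)))

sk, pk = keygen()
sig = sign(sk, b"hello")
print(verify(pk, b"hello", sig), verify(pk, b"goodbye", sig))
print(sum(len(s) for s in sig), "bytes of signature")
```

Forging a signature would mean finding a pre-image of one of the public hash values, so the security rests directly on the hash properties listed above.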

Hash-based signature schemes have been around for a long time and so the estimates of the required parameters to achieve post-quantum security are very reliable.

Multivariate Cryptography

This is based on the difficulty of the MQ-problem: solving multivariate quadratic systems of equations over finite fields is NP-hard (no efficient algorithm is known for solving random multivariate polynomial systems). The difficulty depends on the size of the underlying field, the number of variables and the degree of the system. If the number of equations and variables is sufficiently large, then even quadratic equations over the field with two elements are hard to solve.
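To get a feel for the MQ-problem, here is a toy sketch over the field with two elements. The representation of the polynomials and all the names are my own assumptions for the example. Evaluating the system at a point is cheap, but the only generic way to solve it shown here is to try all 2^n assignments.

```python
import itertools
import random

def random_quadratic(n, rng):
    """A random quadratic polynomial in n variables over GF(2).
    Since x*x = x over GF(2), squares are covered by the linear terms."""
    quad = [(i, j) for i in range(n) for j in range(i + 1, n) if rng.random() < 0.5]
    lin = [i for i in range(n) if rng.random() < 0.5]
    const = rng.randrange(2)
    return quad, lin, const

def evaluate(poly, x):
    quad, lin, const = poly
    value = const
    for i, j in quad:
        value ^= x[i] & x[j]  # multiplication is AND, addition is XOR
    for i in lin:
        value ^= x[i]
    return value

def solve_brute_force(system, target, n):
    # Exhaustive search: 2**n candidate assignments.
    return [x for x in itertools.product((0, 1), repeat=n)
            if all(evaluate(p, x) == t for p, t in zip(system, target))]

rng = random.Random(0)
n = 8  # tiny on purpose; real schemes use far more variables
system = [random_quadratic(n, rng) for _ in range(n)]
secret = tuple(rng.randrange(2) for _ in range(n))
target = [evaluate(p, secret) for p in system]
solutions = solve_brute_force(system, target, n)
print(secret in solutions, len(solutions))
```

Each extra variable doubles the work of the exhaustive search, while evaluating the system stays cheap.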

To construct an asymmetric public-key scheme, the public key itself is a set of multivariate quadratic polynomials, and the private key is often the knowledge of a trapdoor that allows the multivariate system to be solved efficiently. For signatures, the construction allows the use of systems with more variables than equations, so there may be more than one solution. Any of these solutions is a valid signature.

The confidence in multivariate systems is quite high; however, a relatively large public key is required. One approach to reducing key sizes is to use fewer variables over larger fields, but the security of such systems is not well understood. A second approach is to use sparse systems in order to compress the public key, but this often leads to a loss in security.

For public-key encryption, we need the resulting system of equations to have only one solution, so we must have an over-determined system, i.e. the public key must have more polynomials than variables. Currently there are not many multivariate public-key encryption schemes that are considered secure.

Multivariate cryptography can also be used for pseudo-random number generators, cryptographic hash functions and symmetric encryption, e.g. the symmetric stream cipher QUAD.

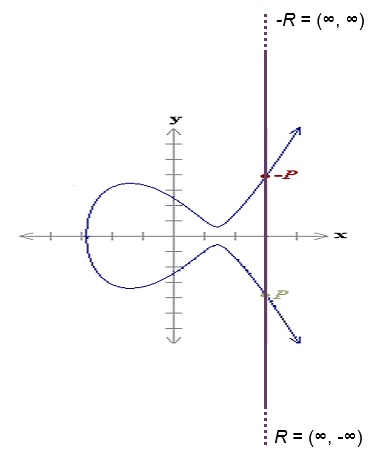

What about Elliptic-Curve Cryptography?

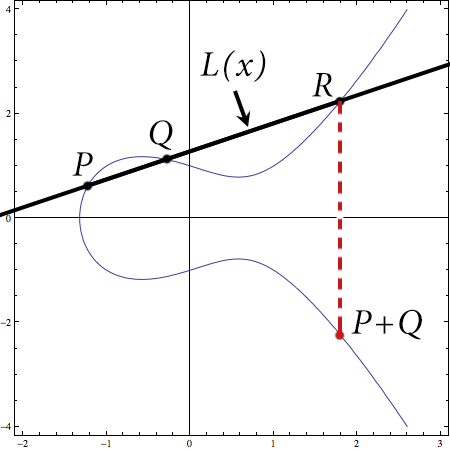

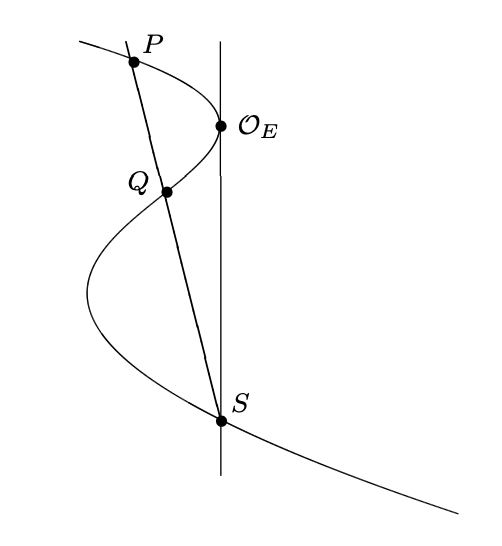

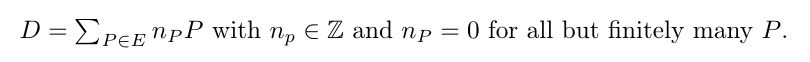

I have talked about elliptic curve cryptography in previous blog posts, so why isn’t this mentioned?

Post-quantum schemes built on elliptic curves are still very new, so there is not yet much confidence in them. As a result, there is currently no consensus that they should be considered candidates for post-quantum public-key encryption.

This is the first instalment in my series about post-quantum cryptography. Anything you want me to talk about in particular? M x